AI has never been as accessible, exciting, and perhaps even scary as it is today. Virtually anyone can now interact with an AI, knowingly or unknowingly. This easier access has sparked widespread excitement about what AI can do and, at the same time, growing concern about how it will influence and change our society. But what does AI really mean?

All the same but all different

Artificial Intelligence is a true nightmare to define, not only for people in charge of legislation but also for technical experts. It is an umbrella term that covers many different aspects and can refer to anything that allows a machine to make a decision beyond randomness. It spans fields from statistics to functional analysis to neural networks, and real systems often combine several of them. For this reason, when we hear that a product, tool, or company uses AI, this might refer to many different things.

In recent years, we have grown used to two types of AI technology: those that classify and, more recently, those that generate their responses. Classification AIs are those that, when presented with a previously unseen piece of data (e.g., an image or some text), can say which category it belongs to ("hey, human, this is a picture of a cat!"). Image classification tools are widely available online, and this type of technology is rapidly gaining credibility in critical fields such as cancer detection, where it can complement human work and boost diagnostic capabilities. Another example is sentiment analysis tools, which, given a text such as a restaurant review, classify it as positive, negative, angry, or hungry. More recently, a lot of attention has gone to generative AI: tools that generate images from a description, like Midjourney, and tools that generate text in response to a user's question or instruction, like ChatGPT.

Your training data determines how well your model will perform

Behind the success of these powerful tools lies mainly one thing: an enormous amount of good training data. Training data are examples meant to show the AI what things should look like, and they usually require an enormous amount of human work to prepare and quality-assure (by now, most of you have probably heard of bias problems in training data). To grasp the scale of the effort involved, consider that each of us has probably created some training data at some point. Have you ever been asked to find an element in a picture to confirm that you are not a robot? At that moment, you were "labelling" an image to train an AI model. Labelling means assigning a short text or description (a label) to each data point. Tools trained this way are therefore referred to as supervised learning tools, because the learning is supervised by a labelled set of example data.
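To make supervised learning a little more concrete, here is a minimal Python sketch of a nearest-centroid classifier, one of the simplest supervised techniques (it is not what powers the tools mentioned above). The labelled data, the two numeric features, and the function names are entirely hypothetical: the labels play the role of the human annotations, and the model uses them to decide which category an unseen data point most likely belongs to.

```python
from math import dist
from statistics import fmean

# Hypothetical labelled training data: (features, label) pairs.
# The features are made-up numbers (think "ear pointiness", "snout length"), for illustration only.
training_data = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.85, 0.25), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
    ((0.4, 0.7), "dog"),
]

def train(data):
    """Learn one centroid (the mean feature vector) per label: this is where the labels supervise."""
    by_label = {}
    for features, label in data:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*points))
        for label, points in by_label.items()
    }

def classify(model, features):
    """Assign an unseen point to the label whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda label: dist(model[label], features))

model = train(training_data)
print(classify(model, (0.75, 0.35)))  # → cat
```

Without the labels, `train` would have nothing to group the points by, which is exactly the situation discussed next.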

What if labelled data are not available?

We often sit on a pile of unlabelled data, because labelling is time-consuming and costly. Labelling data efficiently for a specific problem requires the time of people who know the context, and these are usually highly skilled workers. Moreover, it is better to have a team of people label the data, to ensure multidisciplinary coverage and reduce bias. Most companies I know don't have the resources to store and maintain labelled data.

In unsupervised learning, models are trained without labels or context, relying only on patterns present in the data. As someone who works in anomaly detection, I face the daily challenge of dealing with mostly unlabelled data. Anomaly detection is a classification problem: it means trying to understand, and alert on, when your data behave differently than in the past. Most of the time, this has to be done unsupervised, without knowing whether and when incidents happened. If we knew when past incidents occurred, we could train a model in a supervised way, and it would detect future incidents with higher precision than an unsupervised anomaly detection model. This is precisely why we talk about anomaly detection rather than incident detection: an anomaly is something out of the ordinary, but not necessarily a problem or an incident.
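As an illustration of the unsupervised setting, here is a minimal Python sketch of one of the simplest anomaly detection techniques, a z-score threshold. It is not Eyer's method; the function names, the threshold of 3 standard deviations, and the data are assumptions chosen for illustration. The model never sees a label: it only summarises past behaviour and flags values that deviate strongly from it.

```python
from statistics import fmean, pstdev

def fit_baseline(history):
    """Summarise past, unlabelled observations by their mean and standard deviation."""
    return fmean(history), pstdev(history)

def is_anomaly(value, baseline, threshold=3.0):
    """Flag a value lying more than `threshold` standard deviations away from the past mean."""
    mean, std = baseline
    if std == 0:
        # Perfectly flat history: any different value is unusual.
        return value != mean
    return abs(value - mean) / std > threshold

# Hypothetical metric (e.g. response times in ms); nothing tells us which points were incidents.
history = [102, 98, 101, 99, 100, 103, 97, 100, 101, 99]
baseline = fit_baseline(history)
print([v for v in [100, 104, 150] if is_anomaly(v, baseline)])  # → [150]
```

The value 150 is flagged only because it is unusual compared with the past; whether it corresponds to an actual incident is something this model cannot know.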

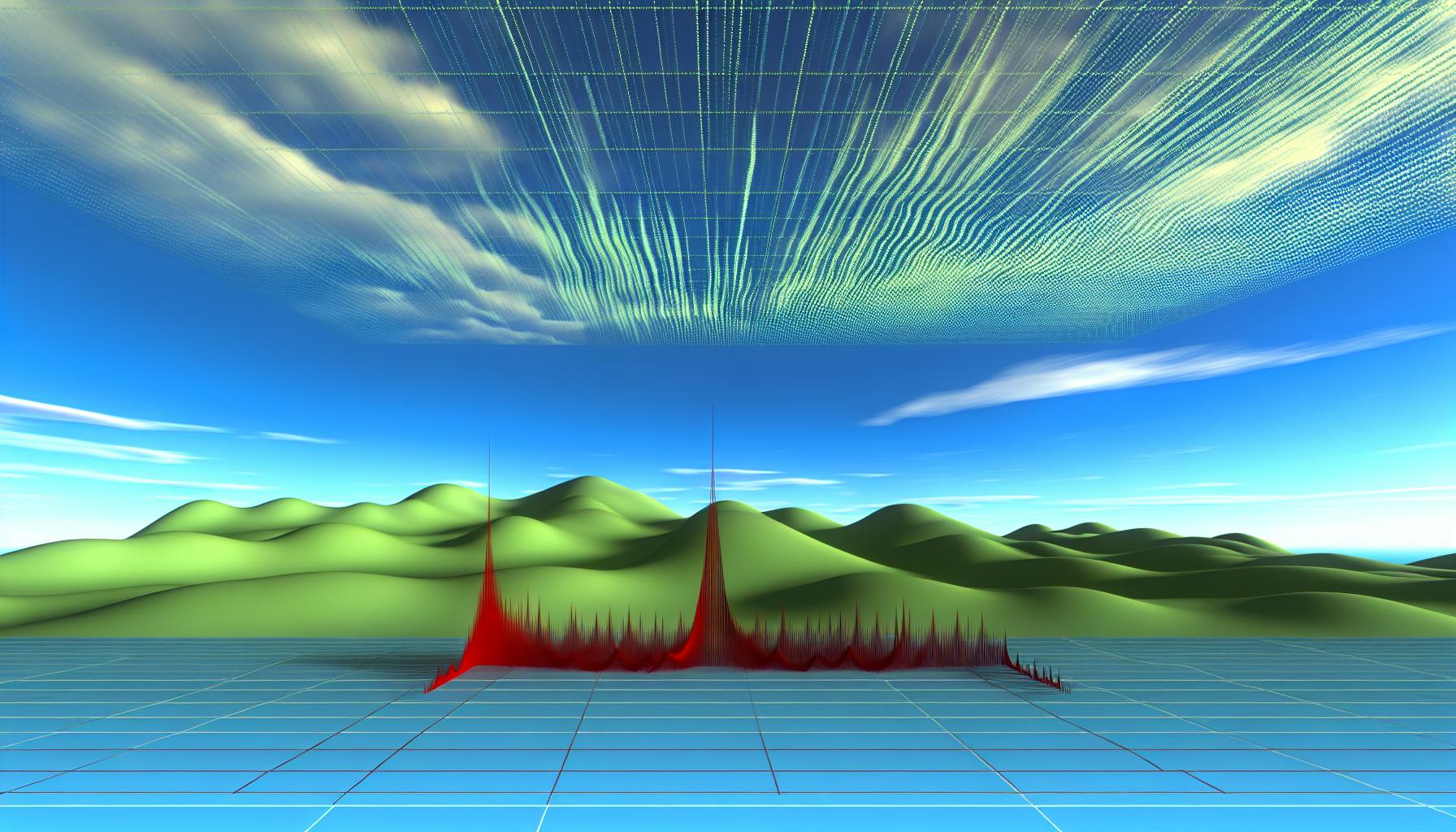

This is where Eyer's anomaly detection comes in: data are fed into Eyer, the most common behaviours are highlighted, and the user is alerted when the data diverge substantially from behaviours observed in the past. Because Eyer deals with the data "as they come", in an unsupervised way, it works under the assumption that past data can themselves contain anomalies. This is the principle behind our multiple-baseline approach: a main baseline describes the most frequent behaviour observed in the past, while secondary baselines capture infrequent behaviours, which could hide anomalies but could also be normal.
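The multiple-baseline principle can be illustrated with a deliberately simple Python sketch. This is not Eyer's implementation: the grouping rule (splitting sorted past values wherever consecutive values are more than `gap` apart), the parameter values, the data, and the names `build_baselines` and `assess` are all hypothetical. It only shows the idea: past data are grouped into behaviours, the most frequent one becomes the main baseline, rarer ones become secondary baselines, and only values that fit none of them are reported as anomalies.

```python
from dataclasses import dataclass
from statistics import fmean, pstdev

@dataclass
class Baseline:
    mean: float
    spread: float
    count: int

def build_baselines(history, gap=10.0, min_spread=1.0):
    """Hypothetical grouping rule: split sorted past values wherever consecutive values
    differ by more than `gap`. Baselines are returned most frequent first (main baseline)."""
    if not history:
        raise ValueError("history must not be empty")
    values = sorted(history)
    groups, current = [], [values[0]]
    for prev, value in zip(values, values[1:]):
        if value - prev > gap:
            groups.append(current)
            current = []
        current.append(value)
    groups.append(current)
    baselines = [Baseline(fmean(g), max(pstdev(g), min_spread), len(g)) for g in groups]
    return sorted(baselines, key=lambda b: b.count, reverse=True)

def assess(value, baselines, threshold=3.0):
    """Return 'main', 'secondary' or 'anomaly' depending on which baseline, if any, explains the value."""
    for rank, b in enumerate(baselines):
        if abs(value - b.mean) <= threshold * b.spread:
            return "main" if rank == 0 else "secondary"
    return "anomaly"

# Hypothetical history: mostly around 100, occasionally around 160.
history = [100, 101, 99, 102, 98, 100, 101, 99, 160, 162, 161]
baselines = build_baselines(history)
print([assess(v, baselines) for v in (101, 161, 130)])  # → ['main', 'secondary', 'anomaly']
```

In this sketch, a value matching a secondary baseline is simply reported as such rather than being declared normal or anomalous, reflecting the fact that infrequent past behaviour may be either.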