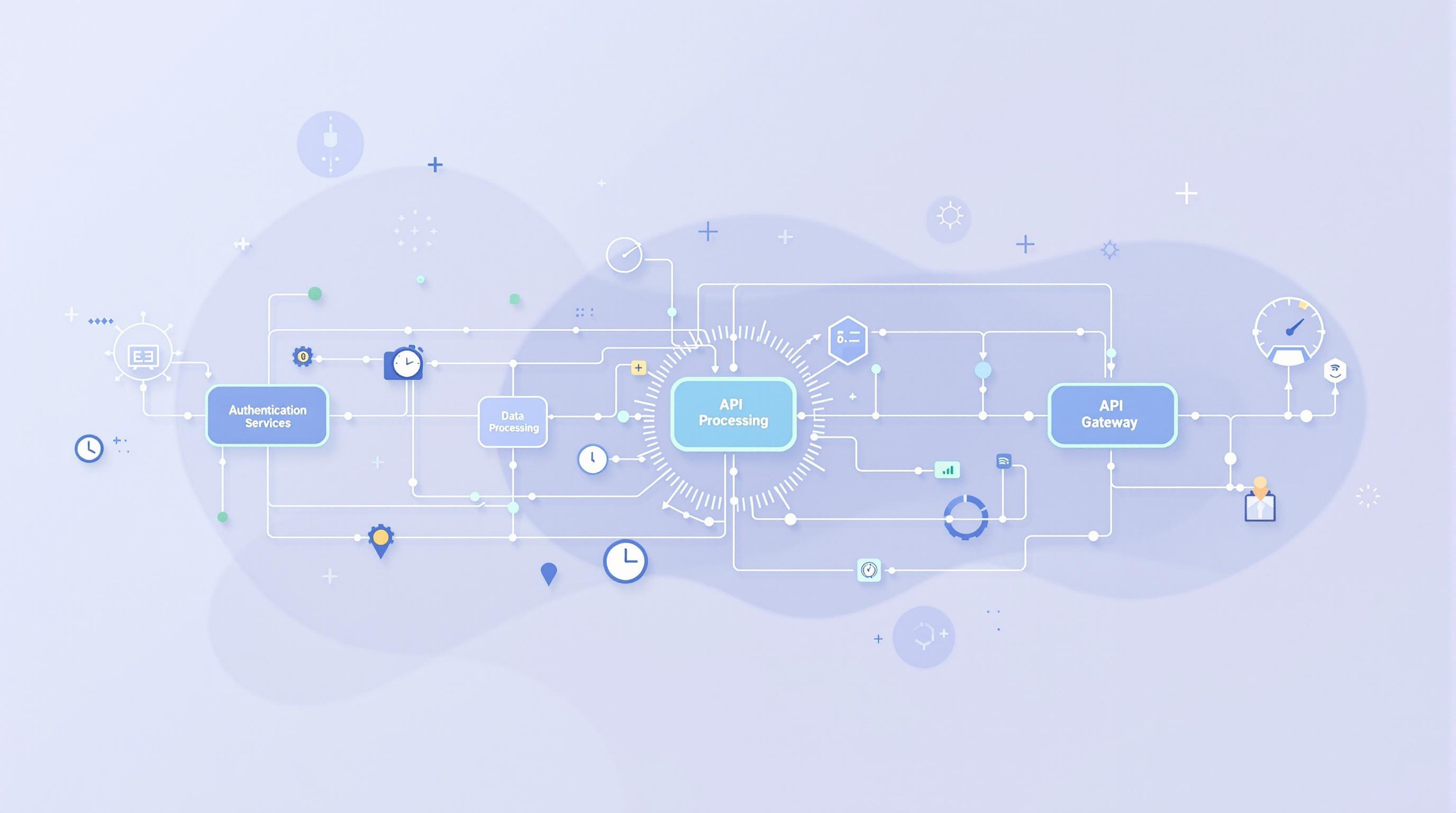

Want to keep your API gateway running smoothly as traffic grows? Here's how to scale up and stay available:

- Load Balancing: Spread requests across servers

- Add More Servers: Increase processing power

- Smart Caching: Store popular data for quick access

- Limit Requests: Cap traffic to prevent overload

- Use Circuit Breakers: Cut off failing services

- Health Checks and Backups: Catch issues early

- Cloud-Based Setup: Leverage flexible cloud resources

Quick Comparison:

| Technique | Ease of Use | Scalability | Reliability |

|---|---|---|---|

| Load Balancing | Easy | High | Medium |

| More Servers | Medium | High | High |

| Caching | Easy | Medium | Medium |

| Request Limits | Easy | Low | High |

| Circuit Breakers | Medium | Medium | High |

| Health Checks | Medium | High | High |

| Cloud Setup | Easy | High | Medium |

Remember: There's no one-size-fits-all solution. AWS API Gateway promises 99.95% uptime, but its connection limits still weren't enough for DAZN's sports streaming needs. Mix and match these techniques based on your specific requirements.


1. Load Balancing

Load balancing is like having multiple checkout lanes at a supermarket. It sits between clients and your API gateway servers, spreading incoming requests across multiple servers. This prevents any single server from getting overwhelmed.

Here's why it's useful:

- Spreads requests evenly

- Keeps your API running if a server fails

- Makes scaling easier - just add more servers

- Boosts performance by reducing load on each server

Real-world example: In 2021, Etsy used load balancing to handle 3 billion daily API calls during the holiday season - a 50% traffic increase.

| Load Balancing Method | Best For |

|---|---|

| Round Robin | Simple requests |

| Least Connections | Varied request complexity |

| IP Hash | User session consistency |

| Weighted Round Robin | Different server capacities |
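To make the table concrete, here is a minimal sketch of the four methods in plain Python. The class and function names are made up for illustration and don't come from any particular load balancer; real products add health checks, connection draining, and smoother weighting.

```python
import itertools
import zlib


class RoundRobin:
    """Cycle through servers in a fixed order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class WeightedRoundRobin:
    """Repeat each server in the cycle according to its integer weight."""

    def __init__(self, weights):
        expanded = [name for name, weight in weights.items() for _ in range(weight)]
        self._cycle = itertools.cycle(expanded)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the server with the fewest in-flight requests."""

    def __init__(self, servers):
        self.active = {name: 0 for name in servers}

    def pick(self):
        name = min(self.active, key=self.active.get)
        self.active[name] += 1  # the caller must release() when the request ends
        return name

    def release(self, name):
        self.active[name] -= 1


def ip_hash(servers, client_ip):
    """Map a client IP to the same server on every call (session consistency)."""
    return servers[zlib.crc32(client_ip.encode()) % len(servers)]
```

Note that this simple IP hash remaps most clients whenever the server list changes. If that matters for your sessions, consistent hashing is the usual next step.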

Tips for setup:

- Use health checks to spot failing servers

- Consider cloud-based load balancers

- Choose the right algorithm for your API

2. Adding More Servers

Adding more servers to your API gateway is like opening extra checkout lanes at a busy store. It helps handle more requests at once.

Here's why it works:

- More servers = more requests processed simultaneously

- If one server fails, others keep working

- Fewer requests per server means faster processing

Let's look at a real-world example:

By default, AWS API Gateway throttles at 10,000 requests per second per account per Region, with a burst capacity of 5,000 requests. But what if you need more?

DAZN, a sports streaming service, found out the hard way. They needed to push updates to millions of users in real-time. AWS API Gateway's limit of 1.8 million new WebSocket connections per hour wasn't enough.

So, how do you add more servers the right way?

1. Use load balancing

Spread requests across your servers evenly. This keeps any single server from getting overloaded.

2. Set up health checks

Keep an eye on your servers. If one struggles, take it out of rotation until it's back to normal.

3. Plan for growth

Don't wait until you're at capacity. Add new servers before you need them.

4. Consider cloud options

Cloud platforms make it easier to add or remove servers as needed.

Here's a quick comparison of scaling options:

| Scaling Method | Pros | Cons |

|---|---|---|

| Static Scaling | Simple to set up | May waste resources during low traffic |

| Dynamic Scaling | Adapts to traffic changes | More complex to configure |

| Geographic Distribution | Improves global performance | Requires more management |

More isn't always better. Start with at least two servers for high availability, then add more based on your needs.
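If you want a rough starting number, a back-of-the-envelope calculation helps. The sketch below assumes you know your peak traffic and have measured what one server can handle; the function name and the 70% target utilization are illustrative assumptions, not a rule from any vendor.

```python
import math


def servers_needed(peak_rps, per_server_rps, target_utilization=0.7, minimum=2):
    """Estimate how many servers cover peak traffic with some headroom.

    target_utilization keeps each server below its measured limit, and
    minimum=2 reflects the "at least two servers" advice for availability.
    """
    if per_server_rps <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("per_server_rps must be > 0 and utilization in (0, 1]")
    usable_rps = per_server_rps * target_utilization
    return max(minimum, math.ceil(peak_rps / usable_rps))
```

For example, `servers_needed(3000, 1000, 0.5)` returns 6: each server is only trusted with 500 requests per second, so 3,000 requests per second need six of them. Treat the result as a starting point and confirm it with load tests.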

"The minimum requirements to achieve high availability in API Gateway are two API Gateway instances, three API Gateway Data Store instances, and two Terracotta Server instances (Active-Passive)." - Software AG

3. Smart Caching

Think of caching as your API gateway's memory bank. It stores popular data for quick access, skipping the backend servers.

Here's the process:

- First request: Gateway fetches and stores data.

- Later requests: Serves stored copy.

- Data refreshes after a set time (TTL).
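The three steps above can be sketched as a small in-memory cache. This is a simplified illustration, not how any specific gateway implements caching; `TTLCache` and `get_or_fetch` are hypothetical names, and the injectable `clock` is only there to make the behavior easy to test.

```python
import time


class TTLCache:
    """Serve stored responses until they are older than ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key, fetch):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # later requests: serve the stored copy
        value = fetch(key)  # first request or expired entry: hit the backend
        self._store[key] = (now + self.ttl, value)
        return value
```

A gateway would typically build the key from the method, path, and query string, and would also cap the cache size. Both are left out here to keep the sketch short.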

Why cache? Speed and efficiency:

- Faster responses

- Less backend work

- Lower costs

Real-world example: AWS API Gateway handles 10,000 requests per second. But for DAZN's real-time sports updates? Not enough.

Setting up AWS API Gateway caching:

- Open stage settings

- Pick cache size

- Set TTL

- Deploy

| Cache Type | Good | Bad |

|---|---|---|

| Client | Fewer network calls | Limited control |

| Server | Full control | Uses server resources |

| CDN | Global reach | Tricky setup |

Pro tips:

- Cache GET requests

- Customize for specific methods

- Use ETags for smart client caching
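Here's a rough idea of how the ETag tip works. The server tags each response with a fingerprint of its body; when the client sends that tag back in `If-None-Match`, the server can answer `304 Not Modified` with no body. The helper names and the `max-age=300` value are assumptions for illustration, and the sketch only handles a single strong ETag (real clients may send several, or weak ones).

```python
import hashlib
from typing import Optional


def make_etag(body: bytes) -> str:
    """Build a quoted ETag from a hash of the response body."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def conditional_get(body: bytes, if_none_match: Optional[str]):
    """Return (status, headers, payload) for a GET with optional If-None-Match."""
    etag = make_etag(body)
    if if_none_match == etag:
        # The client's copy is still current: skip sending the body again.
        return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag, "Cache-Control": "max-age=300"}, body
```

The client saves bandwidth, and the gateway still gets to decide whether the stored copy is fresh.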

From the field:

"We cache hot docs for 5 minutes in our knowledge base API. After the first search, it's all cache for that window." - Tech Startup API Dev

4. Limiting Requests

API gateways need to handle traffic like a pro. That's where request limiting comes in. It's your API's bouncer, keeping the party under control.

Here's the deal:

1. Set a cap

Pick your limits. For example:

| Time Frame | Request Limit |

|---|---|

| Per second | 10 |

| Per minute | 100 |

| Per hour | 1,000 |

2. Track requests

Count 'em up, usually by IP or API key.

3. Enforce limits

When someone hits the ceiling:

- Reject extras (hard throttling)

- Slow things down (soft throttling)

- Queue for later

Real-world examples:

"Weather.com API allows 1,000 requests per hour on their free tier. No server meltdowns during heatwaves!"

"Stripe uses a token bucket system. It handles sales spikes while keeping things in check long-term."
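For a feel of how a token bucket behaves, here is a generic sketch. It is not Stripe's code; the class name, parameters, and injectable `clock` are assumptions for illustration. Tokens refill at a steady rate up to a cap, so short bursts pass while the long-run rate stays bounded.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` while averaging `rate_per_second`."""

    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)  # start full so an initial burst is allowed
        self._last = clock()

    def allow(self, cost=1):
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the time that passed, but never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # caller decides: reject, delay, or queue
```

With `TokenBucket(rate_per_second=10, capacity=20)`, a client can fire 20 requests at once, then has to settle into roughly 10 per second.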

Cloudflare's API Shield offers flexible limits:

| Setting | Example |

|---|---|

| Criteria | User Agent = "MobileApp" |

| Rate | 100 requests / 10 minutes |

| Action | Challenge suspicious requests |

This stops data scrapers and attacks cold.

Smart moves:

- Use multiple tiers (second, minute, hour)

- Store limit data in Redis for distributed setups

- Include limit info in headers so clients can play nice
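A minimal sketch of the first and third tips, using the example limits from the table earlier (10 per second, 100 per minute, 1,000 per hour). The counters live in a plain dict here; in a distributed setup a shared store such as Redis would take its place. `TieredLimiter` is a hypothetical name, and `X-RateLimit-Remaining` is a common convention rather than a standard header (`Retry-After` is standard HTTP).

```python
import math
import time

# tier name -> (window length in seconds, max requests per window)
TIERS = {"second": (1, 10), "minute": (60, 100), "hour": (3600, 1000)}


class TieredLimiter:
    """Fixed-window counters checked at several time scales."""

    def __init__(self, tiers=TIERS, clock=time.time):
        self.tiers = tiers
        self._clock = clock
        # (client, tier, window index) -> count. Old windows are never purged
        # in this sketch; a shared store would expire them automatically.
        self._counts = {}

    def check(self, client_id):
        now = self._clock()
        keys = {}
        for tier, (window, limit) in self.tiers.items():
            key = (client_id, tier, int(now // window))
            if self._counts.get(key, 0) >= limit:
                # Seconds until this tier's window rolls over, rounded up.
                retry_after = math.ceil(window - now % window)
                return 429, {"Retry-After": str(retry_after)}
            keys[tier] = key
        remaining = []
        for tier, key in keys.items():
            self._counts[key] = self._counts.get(key, 0) + 1
            remaining.append(self.tiers[tier][1] - self._counts[key])
        # Report the tightest tier so clients know how close they are.
        return 200, {"X-RateLimit-Remaining": str(min(remaining))}
```

Fixed windows are easy to reason about but allow a double burst at window boundaries. A sliding window or a token bucket smooths that out if it becomes a problem.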


5. Using Circuit Breakers

Circuit breakers act as safety switches for your API gateway. They cut off requests when things go south, stopping system overload.

Here's the gist:

- Watch API calls

- Spot failures or slowdowns

- Cut off traffic to struggling services

- Let limited traffic through after a break

Circuit breakers have three states:

| State | What it means |

|---|---|

| Closed | All good, requests flow |

| Open | Problems found, requests blocked |

| Half-Open | Testing if service is back |

Why bother? Circuit breakers:

- Stop domino-effect failures

- Give failing services a breather

- Keep your system running

Real-world example:

Netflix uses circuit breakers to handle traffic spikes and outages. During the 2020 lockdowns, they saw a 16% jump in global streaming. Circuit breakers helped keep things smooth.

To set up circuit breakers:

- Pick failure limits (like 5 errors in 10 seconds)

- Set cooldown times (30 seconds in open state)

- Use tools like Resilience4j or Hystrix (Java; Hystrix is now in maintenance mode) or Polly (.NET)
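To see how the three states fit together, here is a bare-bones breaker using the example numbers above (5 errors in 10 seconds, 30 seconds in the open state). It's a teaching sketch with made-up names, not a replacement for a real library, and it ignores thread safety.

```python
import time
from collections import deque


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(self, max_failures=5, window=10.0, cooldown=30.0,
                 clock=time.monotonic):
        self.max_failures = max_failures
        self.window = window
        self.cooldown = cooldown
        self._clock = clock
        self._failures = deque()  # timestamps of recent failures
        self._opened_at = None
        self.state = "closed"

    def call(self, func, *args, **kwargs):
        now = self._clock()
        if self.state == "open":
            if now - self._opened_at < self.cooldown:
                raise CircuitOpenError("circuit open; request rejected")
            self.state = "half-open"  # cooldown over: let a trial call through
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._record_failure(now)
            raise
        if self.state == "half-open":
            # The trial call worked: resume normal traffic.
            self.state = "closed"
            self._failures.clear()
        return result

    def _record_failure(self, now):
        self._failures.append(now)
        # Forget failures that fell out of the sliding window.
        while self._failures and now - self._failures[0] > self.window:
            self._failures.popleft()
        if self.state == "half-open" or len(self._failures) >= self.max_failures:
            self.state = "open"
            self._opened_at = now
```

To cover slow responses as well, wrap the call in a timeout that raises an exception, so a slow call counts as a failure.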

Pro tip: Set different limits for critical and non-critical services.

"Circuit breakers are a must-have for high-traffic API gateways. They're your first defense against cascading failures", says Adrian Cockcroft, ex-Cloud Architect at Netflix.

Don't forget: Use circuit breakers for slow responses too, not just errors. A slow service can be as bad as a dead one.

6. Health Checks and Backup Systems

Health checks and backups keep your API gateway running smoothly. They catch issues early and provide a safety net.

Here's how to set up health checks:

- Check often (every 5 minutes)

- Test everything (server health, app status, processes)

- Set clear limits ("healthy" = response time under 200ms)

- Use auto-alerts
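As a rough illustration of the "clear limits" idea, the sketch below treats a server as healthy only if its probe succeeds and answers within 200 ms. The `probe` callables are placeholders for whatever you actually check (an HTTP ping, a database query, a process check), and all names here are hypothetical.

```python
import time


def check_health(probe, max_latency_ms=200.0, clock=time.perf_counter):
    """Run one probe and judge it on both success and response time."""
    start = clock()
    try:
        ok = bool(probe())
    except Exception:
        ok = False  # a crashing probe counts as unhealthy
    latency_ms = (clock() - start) * 1000.0
    return {"healthy": ok and latency_ms <= max_latency_ms,
            "latency_ms": latency_ms}


def healthy_servers(probes, **kwargs):
    """Return the names of servers that should stay in rotation."""
    return [name for name, probe in probes.items()
            if check_health(probe, **kwargs)["healthy"]]
```

In practice you'd run this on a schedule, require a few consecutive failures before pulling a server, and send an alert when the healthy list shrinks.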

David Yanacek, Senior Principal Engineer at AWS, says:

"Health checks detect and respond to these kinds of issues automatically."

For backups, use both database-native and declarative types. Having both gives you more options if things break.

| Backup Type | What It Does | When to Use |

|---|---|---|

| Database-native | Copies whole database | Quick restores |

| Declarative | Manages config files | Flexible recovery |

For Amazon API gateways:

- Export your REST or HTTP API definitions

- Use SAM or CloudFormation

- Save API definitions in OpenAPI format (Swagger is the older name for OpenAPI 2.0)

Tyk users, try this backup script:

```shell
./backup.sh export --url https://my-tyk-dashboard.com --secret mysecretkey --api-output apis.json --policy-output policies.json
```

Always back up before upgrades.

For monitoring, watch:

- Throughput

- Error rates

- Latency

On AWS, set up CloudWatch alarms for each. They'll help you spot trends and fix issues fast.
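If you're wondering what those three numbers look like in practice, here is a small sketch that derives them from a batch of request records. The record shape `(status_code, latency_ms)` and the function name are assumptions for illustration; a monitoring service computes the same kind of figures for you.

```python
def summarize(requests, window_seconds):
    """Summarize (status_code, latency_ms) records seen in one time window."""
    if not requests:
        return {"throughput_rps": 0.0, "error_rate": 0.0, "p95_latency_ms": None}
    latencies = sorted(latency for _, latency in requests)
    errors = sum(1 for status, _ in requests if status >= 500)
    # Nearest-rank 95th percentile: ceil(0.95 * n) in integer arithmetic.
    rank = (95 * len(latencies) + 99) // 100
    return {
        "throughput_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests),
        "p95_latency_ms": latencies[rank - 1],
    }
```

Alarms usually fire on thresholds over these values, such as an error rate above a few percent or a p95 latency above your target for several minutes in a row.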

7. Cloud-Based Setup

Cloud API gateways offer flexibility and reliability. They scale easily, handle traffic spikes, and provide global reach.

Here's why cloud setups rock:

- Auto-scale with demand

- Reduce latency with global distribution

- Quick updates without downtime

- Pay-as-you-go pricing

Popular cloud API gateways:

| Gateway | Key Feature | Market Share |

|---|---|---|

| Azure API Management | Wide integration | 71.10% |

| Amazon API Gateway | Serverless support | 9.37% |

| Gloo Edge | Kubernetes-native | - |

Amazon API Gateway shines with serverless setups, pairing well with AWS Lambda for auto-scaling systems.

"AWS API Gateway provides a 99.95% uptime guarantee", says an AWS representative.

That's about 4.4 hours of potential downtime per year.
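The downtime budget behind any uptime percentage is simple arithmetic, so it's worth checking for yourself. This sketch assumes a 365-day year; the function name is made up for illustration.

```python
def allowed_downtime_hours(uptime_percent, days=365):
    """Hours of downtime permitted over `days` at a given uptime percentage."""
    return (1 - uptime_percent / 100) * days * 24
```

For 99.95% this comes to about 4.38 hours per year, or roughly 22 minutes in a 30-day month. Going to 99.99% shrinks the yearly budget to under an hour.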

Amazon API Gateway can handle:

- 10,000 API requests per second

- 1.8 million new WebSocket connections per hour

But it's not for everyone. DAZN, a sports streaming service, found these limits too low for real-time updates to millions of users.

Need more control? Check out Gloo Edge. It's great for complex, multi-cloud setups and centralized management of global routing.

When choosing a cloud API gateway, consider:

- Expected request volume

- User locations

- Your existing cloud services

Comparing the 7 Methods

Let's break down how each API gateway scaling technique stacks up:

| Method | Ease of Use | Ability to Grow | System Reliability |

|---|---|---|---|

| Load Balancing | High | High | Medium |

| Adding More Servers | Medium | High | High |

| Smart Caching | High | Medium | Medium |

| Limiting Requests | High | Low | High |

| Using Circuit Breakers | Medium | Medium | High |

| Health Checks and Backup Systems | Medium | High | High |

| Cloud-Based Setup | High | High | Medium |

Load Balancing is a breeze to set up and great for growth. But watch out - it might hiccup on reliability.

Adding More Servers is like building muscle. It takes work, but you'll end up strong and flexible. Big players like Facebook and Google swear by this method.

Smart Caching is your friendly neighborhood helper. It's easy to use and keeps things running smoothly. Just don't expect it to handle a sudden population boom.

Limiting Requests is like putting up a "No Vacancy" sign. It's simple and keeps your system stable, but it's not exactly rolling out the welcome mat for growth.

Circuit Breakers need some elbow grease to set up, but they're champs at keeping your system on its feet. They're the secret sauce in microservices recipes.

Health Checks and Backup Systems are like having a personal trainer and a spare tire. They take some effort to set up, but they'll keep you running strong and ready for anything.

Cloud-Based Setup is the "easy button" of scaling. It's simple and can grow big, but it might leave you hanging for about 4.4 hours a year. (That's AWS API Gateway's 99.95% uptime promise.)

Remember, there's no one-size-fits-all solution. Take DAZN, for example. They found AWS API Gateway's 1.8 million new WebSocket connections per hour too tight for their sports streaming needs.

For the complex stuff, consider tools like Gloo Edge. It's like a Swiss Army knife for multi-cloud setups, offering more control and flexibility when you need to scale up big time.

Wrap-up

Let's recap the 7 API gateway scaling techniques:

- Load Balancing

- Adding More Servers

- Smart Caching

- Limiting Requests

- Using Circuit Breakers

- Health Checks and Backup Systems

- Cloud-Based Setup

Combining these methods is where the magic happens. Here's why:

It's all about layers. If one method fails, others step in. Edge Stack API Gateway, for example, runs multiple instances across different Kubernetes nodes. No single point of failure.

Different methods shine in different situations. Load balancing spreads traffic, while circuit breakers stop failures from spreading in microservices.

Speed and stability get a boost. Mix caching with request limiting, and you're cooking with gas. By default, AWS API Gateway allows 10,000 requests per second, with a burst capacity of 5,000 requests.

Check out how these methods team up:

| Combo | What It Does |

|---|---|

| Load Balancing + Health Checks | Only healthy servers get traffic |

| Caching + Request Limiting | Less backend stress, protection from traffic spikes |

| Cloud Setup + Auto-scaling | Resources adjust on the fly |

But remember, there's no perfect solution for everyone. DAZN found out the hard way that AWS API Gateway's 1.8 million new WebSocket connections per hour wasn't enough for their sports streaming needs.

Got a complex setup? Look into tools like Gloo Edge. It gives you more control in multi-cloud environments when you need to go big.

FAQs

How do you scale up an API gateway?

Scaling an API gateway isn't rocket science. Here's what you need to do:

- Test your limits

- Set your baselines

- Keep an eye on speed

- Spread the load

Take AWS API Gateway. Its default limit is 10,000 requests per second, with a burst capacity of 5,000 requests. But DAZN, a sports streaming service, needed way more. They were dealing with over 1.8 million new WebSocket connections every hour!

Is API Gateway highly available?

You bet. API gateways are built to keep running. Here's why:

- They're spread out across different nodes

- If one fails, others pick up the slack

- The whole system stays up even if some parts go down

AWS API Gateway promises 99.95% uptime. That's only about 4.4 hours of downtime allowed per year. Not bad, right?

But here's the catch:

AWS doesn't guarantee perfect data integrity all the time. You might see brief disconnections. And you might need to add some extra logic to prevent losing messages or getting them out of order.

| Feature | What it does |

|---|---|

| Multiple instances | No single point of failure |

| Spread out load | Better performance and reliability |

| Health checks | Traffic avoids problem areas automatically |

Just remember: High availability doesn't mean flawless. Always have a plan B for when things go sideways.