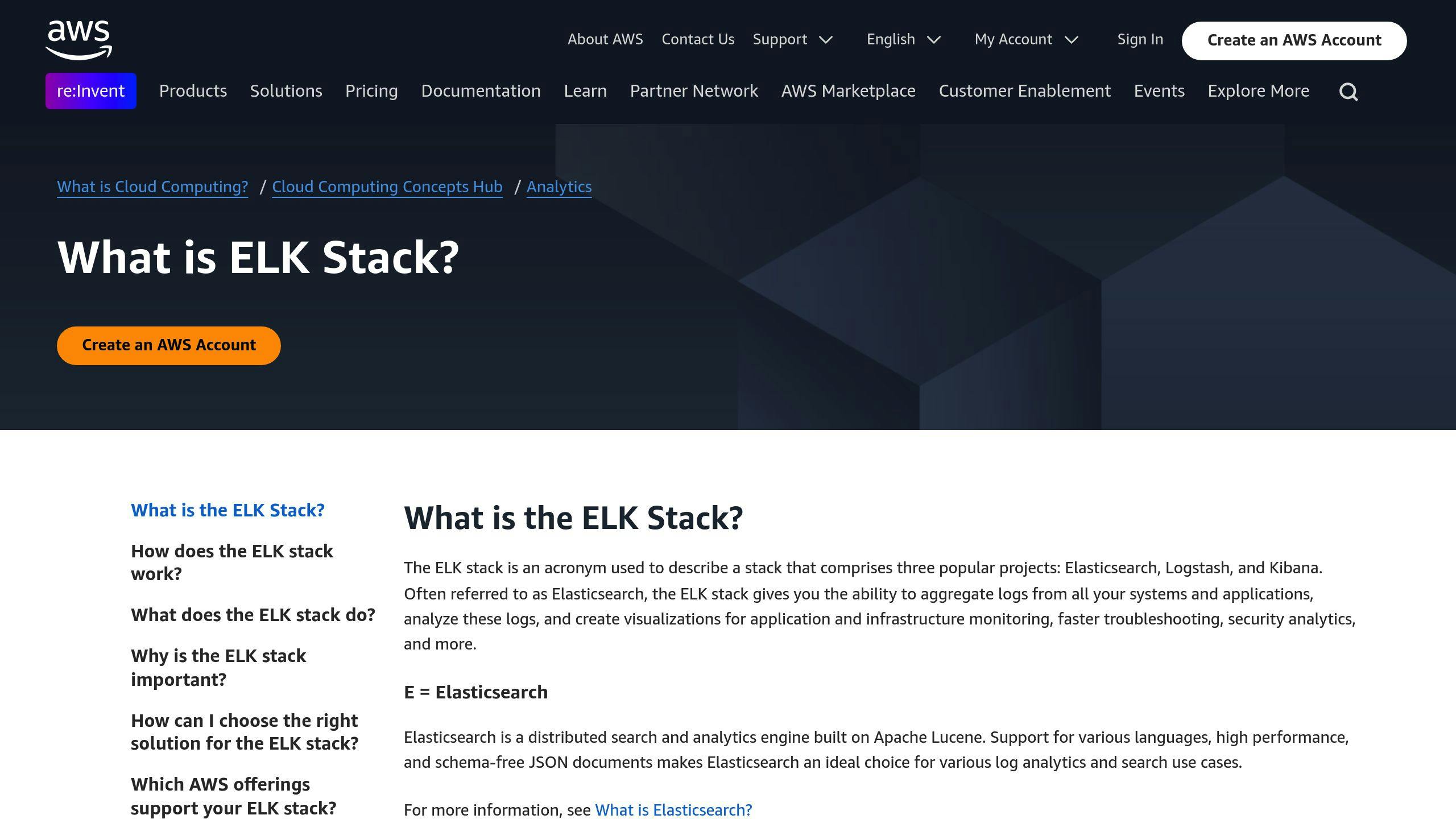

The ELK Stack (Elasticsearch, Logstash, Kibana) makes it easier to detect unusual patterns in IT data. Its machine learning features identify anomalies in near real time, and Kibana provides the tools to analyze them. Here's a quick overview:

Why ELK for Anomaly Detection?

- Real-time monitoring of system performance and security.

- Automated learning to detect irregularities.

- Scalable and integrates seamlessly with IT systems.

Steps to Set Up Anomaly Detection:

- Install and configure Elasticsearch, Logstash, and Kibana.

- Prepare and preprocess data using Logstash (e.g., filter fields, format timestamps).

- Create machine learning jobs in Kibana for single or multi-metric analysis.

- Fine-tune settings like bucket spans, anomaly thresholds, and influencers.

Analyzing Results:

- Use Kibana's Anomaly Explorer to view anomalies with severity scores.

- Focus on metrics like anomaly scores, influencer scores, and actual vs. expected values.

Alerts and Automation:

- Set up alerts in Kibana or use Watcher for custom triggers.

- Automate responses with tools like Eyer.ai for faster issue resolution.

Early anomaly detection helps avoid system disruptions and improves IT performance. Start by configuring the ELK Stack, refining machine learning models, and integrating alerts for proactive monitoring.

How to Set Up Anomaly Detection in the ELK Stack

Installing and Setting Up the ELK Stack

To get started with anomaly detection, you'll need to install the three main components of the ELK Stack:

- Elasticsearch: Handles search and analytics.

- Logstash: Manages data processing.

- Kibana: Provides visualization and management tools.

Make sure you have an Elastic Platinum or Enterprise license (or an active trial license), as machine learning features require one [2]. Also, check that your system meets the necessary resource requirements. Once the ELK Stack is up and running, you can move on to preparing your data for analysis.

Preparing Data for Analysis

Logstash is key to structuring your data pipeline for anomaly detection. Here's how you can organize it effectively:

Data Ingestion Options:

- Use Beats for lightweight data collection and shipping.

- Use Logstash filters to clean, transform, and structure your data, for example by dropping unneeded fields or formatting timestamps.

Standardizing timestamps and extracting key fields are crucial. Use the date filter in Logstash to handle timestamp formatting and the grok filter to pull out specific fields from your log data [4]. Properly structured data is essential for accurate anomaly detection.
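To make the two steps above concrete, here is a minimal Python sketch of what field extraction and timestamp normalization accomplish. It is not Logstash configuration and does not use grok or the date filter themselves; it only mirrors their effect with a regular expression and `datetime`. The log layout, the field names, and the `parse_line` helper are assumptions made for illustration.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Hypothetical log layout: "<dd/Mon/yyyy:HH:MM:SS +ZZZZ> <host> <LEVEL> <message>"
LINE_PATTERN = re.compile(
    r"(?P<timestamp>\S+ [+-]\d{4}) (?P<host>\S+) (?P<level>[A-Z]+) (?P<message>.*)"
)


def parse_line(line: str) -> Optional[dict]:
    """Extract named fields (grok-like) and normalize the timestamp (date-like)."""
    match = LINE_PATTERN.match(line)
    if match is None:
        return None  # unparseable lines are dropped in this sketch
    fields = match.groupdict()
    # Parse the source timestamp, then store it as ISO 8601 in UTC.
    parsed = datetime.strptime(fields.pop("timestamp"), "%d/%b/%Y:%H:%M:%S %z")
    fields["@timestamp"] = parsed.astimezone(timezone.utc).isoformat()
    return fields


event = parse_line("12/Mar/2024:14:05:09 +0100 web-01 ERROR upstream timed out")
print(event)
```

Storing every event with the same UTC timestamp format matters because anomaly detection groups events into time buckets; inconsistent time zones would shift events into the wrong bucket.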

Once your data is processed and ready, you can set up machine learning jobs in Kibana.

Creating Machine Learning Jobs in Kibana

Go to the Machine Learning section in Kibana to create and manage your anomaly detection jobs [2].

Setting Up the Job:

- Select between single-metric or multi-metric analysis.

- Configure detectors with aggregation functions and specify the time field for analysis.

- Define the bucket span to analyze data over specific time intervals.
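As a rough illustration of the settings listed above, the following Python sketch assembles a request body for a single-metric job in the shape accepted by Elasticsearch's create anomaly detection job API (`PUT _ml/anomaly_detectors/<job_id>`). The helper name, the `system.cpu.total.pct` field, the `@timestamp` time field, and the `15m` bucket span are assumptions chosen for illustration; substitute values that match your own index.

```python
import json


def build_single_metric_job(field_name, time_field, bucket_span="15m", function="mean"):
    """Return a job body with one detector, one bucket span, and a time field."""
    return {
        "description": f"{function} of {field_name}",
        "analysis_config": {
            # Interval over which data is summarized before it is modeled.
            "bucket_span": bucket_span,
            # A single detector makes this a single-metric job.
            "detectors": [{"function": function, "field_name": field_name}],
        },
        # Tells the job which field holds the event timestamp.
        "data_description": {"time_field": time_field},
    }


job = build_single_metric_job("system.cpu.total.pct", "@timestamp")
print(json.dumps(job, indent=2))
```

The Kibana wizard builds an equivalent configuration for you; writing it out by hand is mainly useful when you want to keep job definitions in version control.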

Fine-Tuning Detection:

- Adjust anomaly scoring thresholds to improve accuracy.

- Identify influencers that might impact your metrics.

- Create custom rules to account for expected variations.
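One way to express an expected variation is a custom rule attached to a detector. The sketch below, in Python, adds a rule that skips results when the actual value falls on one side of a threshold. The `custom_rules`, `actions`, and `conditions` keys follow the Elasticsearch job configuration format; the helper name, the `cpu_utilization` field, and the 0.20 threshold are assumptions for illustration only.

```python
ALLOWED_OPERATORS = {"gt", "gte", "lt", "lte"}


def with_skip_rule(detector: dict, operator: str, value: float) -> dict:
    """Return a copy of the detector with an extra 'skip_result' rule."""
    if operator not in ALLOWED_OPERATORS:
        raise ValueError(f"unsupported operator: {operator}")
    rule = {
        # Do not create anomaly results when the condition matches.
        "actions": ["skip_result"],
        "conditions": [
            {"applies_to": "actual", "operator": operator, "value": value}
        ],
    }
    updated = dict(detector)  # leave the caller's detector untouched
    updated["custom_rules"] = list(detector.get("custom_rules", [])) + [rule]
    return updated


detector = {"function": "mean", "field_name": "cpu_utilization"}
quiet_detector = with_skip_rule(detector, "lt", 0.20)
print(quiet_detector)
```

Here the rule suppresses anomalies while mean CPU utilization is below 20%, on the assumption that unusual behavior at such low load is not worth an alert.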

You can also use Kibana's sample datasets to test and refine your configurations before applying them to your production environment [3]. This ensures your setup is optimized for detecting anomalies effectively.


How to Analyze Anomaly Detection Results

Once your anomaly detection jobs are set up, it's important to know how to interpret the results to make informed decisions.

Navigating the Anomaly Explorer

The Anomaly Explorer in Kibana is your go-to tool for digging into anomalies. It features both timeline and table views to help you examine irregularities in your data. Start by selecting your job, using the timeline to identify clusters of unusual activity, and then switching to the table view for a closer look at specific events.

The timeline uses color-coded severity markers to highlight periods of unusual activity, making it easier to spot critical issues [2]. After identifying anomalies, focus on the provided metrics and visualizations to understand their relevance and impact.

Understanding Metrics and Visualizations

Here are some key metrics to focus on during your analysis:

- Anomaly Score: Ranges from 0 to 100. Scores above 75 indicate issues that require immediate attention.

- Influencer Score: Highlights factors contributing to the anomaly.

- Actual vs. Expected: Shows how observed values differ from predictions, helping you gauge the extent of the deviation.
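When triaging results outside Kibana, it can help to translate scores into severity bands. The Python sketch below assumes four bands labeled warning, minor, major, and critical, split at 25, 50, and 75. Only the 75 threshold comes from the text above; the other cut-offs and the function name are assumptions, so check them against the legend in your Kibana version.

```python
def severity_label(score: float) -> str:
    """Map a 0-100 anomaly score to an assumed severity band."""
    if not 0 <= score <= 100:
        raise ValueError("anomaly scores are expected to lie between 0 and 100")
    if score >= 75:
        return "critical"
    if score >= 50:
        return "major"
    if score >= 25:
        return "minor"
    return "warning"


for sample in (12.0, 48.5, 75.0, 99.2):
    print(sample, severity_label(sample))
```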

To dive deeper, use the Single Metric Viewer. This tool offers a detailed visual breakdown of your data trends and automatically marks periods with missing data, ensuring you don't lose context during your analysis [2][1].

Improving Detection Accuracy

To refine your results, tweak the bucket spans and detector functions so they align better with your data's unique patterns and your specific use case. Reduce false positives by applying your domain expertise and regularly reviewing parameters.

The Job Management pane is another helpful resource. It shows each job's state, processed record counts, model memory usage, and job messages, which helps you monitor job health while you tune detection accuracy over time [2].


Tips for Effective Anomaly Detection in the ELK Stack

Choosing the Right Data and Job Types

Begin with single-metric jobs to monitor specific metrics, such as CPU usage. For a more comprehensive view, use multi-metric jobs to analyze related metrics like CPU usage and memory consumption together. This can help you spot system-wide issues that might not be obvious when metrics are viewed in isolation [1][2].

Leveraging Influencers and Multi-Metric Jobs

Pick metrics that work well together, align bucket spans with your data's behavior, and set thresholds based on what's normal for your system. Use fields like IP addresses or user IDs as influencers to pinpoint the source of anomalies effectively [1][2].

Multi-metric jobs go a step further by analyzing related metrics simultaneously. This method helps you uncover complex patterns and relationships that might be missed when metrics are assessed one at a time.
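A multi-metric job with influencers could be sketched in Python as follows. The body uses the `detectors`, `partition_field_name`, and `influencers` keys from the Elasticsearch job configuration format; the helper name, the metric fields, and the `host.name` and `source.ip` fields are assumptions for illustration.

```python
def build_multi_metric_job(metrics, split_field, influencers, time_field, bucket_span="15m"):
    """Return a job body with one 'mean' detector per metric, split by a field."""
    detectors = [
        {
            "function": "mean",
            "field_name": metric,
            # Model each value of split_field (for example, each host) separately.
            "partition_field_name": split_field,
        }
        for metric in metrics
    ]
    return {
        "analysis_config": {
            "bucket_span": bucket_span,
            "detectors": detectors,
            # Fields whose values may explain who or what caused an anomaly.
            "influencers": list(influencers),
        },
        "data_description": {"time_field": time_field},
    }


multi_job = build_multi_metric_job(
    metrics=["system.cpu.total.pct", "system.memory.used.pct"],
    split_field="host.name",
    influencers=["host.name", "source.ip"],
    time_field="@timestamp",
)
print(multi_job["analysis_config"]["detectors"])
```

Including the split field among the influencers is a common choice, since it lets the results show which host contributed most to an anomalous period.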

Enhancing Observability with Eyer.ai

Integrating Eyer.ai with the ELK Stack takes your anomaly detection to the next level. It adds features like metrics correlation to identify relationships between data points, root cause detection to zero in on issues, and proactive alerting to address problems before they escalate. Eyer.ai also works with popular data collection tools like Telegraf, Prometheus, and StatsD, ensuring compatibility with your existing setup.

Setting Up Alerts and Automating Actions

Creating Alerts in Kibana

Head to the "Alerts and Actions" section in Kibana (labeled "Rules and Connectors" or "Rules" in more recent versions), click on "Create alert", and select "Anomaly detection alert." Customize the trigger conditions based on your machine learning (ML) job. You can fine-tune thresholds for business hours versus off-peak times to reduce unnecessary alerts, adjusting sensitivity to align with your operational priorities.

Using Watcher for Custom Alerts

For more complex scenarios, use Watcher to craft custom alerts. You can create watches through the Dev Tools console or the Watcher UI. Define specific triggers (like time intervals or certain events), set up conditions that combine multiple metrics, and outline actions such as sending emails or triggering webhooks. Watcher also allows you to build composite conditions, so alerts only fire when anomalies occur across multiple systems within a set timeframe [4].
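The trigger, condition, and action parts of a watch can be sketched as a Python dictionary that you would then submit through the Dev Tools console or the Watcher API. This example assumes that anomaly records are read from the `.ml-anomalies-*` results indices and uses a simple logging action; the job name `cpu-usage`, the score threshold, the intervals, and the helper name are illustrative assumptions.

```python
import json


def build_anomaly_watch(job_id, min_score=75.0, interval="5m", lookback="10m"):
    """Return a watch body that fires when recent high-score records exist."""
    return {
        # Trigger: run the watch on a fixed schedule.
        "trigger": {"schedule": {"interval": interval}},
        # Input: count recent anomaly records for one job above the threshold.
        "input": {
            "search": {
                "request": {
                    "indices": [".ml-anomalies-*"],
                    "body": {
                        "size": 0,
                        "query": {
                            "bool": {
                                "filter": [
                                    {"term": {"job_id": job_id}},
                                    {"term": {"result_type": "record"}},
                                    {"range": {"record_score": {"gte": min_score}}},
                                    {"range": {"timestamp": {"gte": "now-" + lookback}}},
                                ]
                            }
                        },
                    },
                }
            }
        },
        # Condition: fire only if at least one record matched.
        "condition": {"compare": {"ctx.payload.hits.total": {"gt": 0}}},
        # Action: write a log line; email or webhook actions would go here instead.
        "actions": {
            "log_anomaly": {
                "logging": {
                    "text": "Job " + job_id + ": {{ctx.payload.hits.total}} high-score anomaly records"
                }
            }
        },
    }


watch = build_anomaly_watch("cpu-usage")
print(json.dumps(watch, indent=2))
```

Keeping the lookback window longer than the schedule interval means consecutive runs overlap slightly, which trades an occasional duplicate notification for a lower chance of missing a late-arriving result.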

Automating Responses with External Tools

External tools can extend anomaly detection with more advanced automation. For example, integrating Eyer.ai enables features like incident management (e.g., automatic ticket creation), root cause analysis (using Eyer.ai's correlation engine), and automated responses (e.g., triggering immediate actions via Boomi).

While automation is great for handling routine issues, critical anomalies still benefit from human oversight. This combined approach ensures quick responses while leaving complex problems to skilled professionals [4].

Summary and Next Steps

Key Steps for Setting Up Anomaly Detection

To get started with anomaly detection in the ELK Stack, make sure Elasticsearch is configured with enough resources, prepare well-indexed and reliable data, and set up machine learning jobs specific to your needs. Be sure to include strong alerting systems so you can quickly address critical anomalies when they arise.

Why Early Anomaly Detection Matters

Spotting anomalies early helps avoid system disruptions, shortens the time it takes to identify problems (MTTD), and speeds up resolution (MTTR). Using real-time monitoring combined with machine learning allows you to catch potential issues before they grow into larger problems. Together, these tools improve system stability and operational performance.

Taking Your Monitoring Strategy Further

The ELK Stack is a solid starting point, but adding tools like Eyer.ai can take your monitoring capabilities to the next level. To get the most out of this approach, focus on:

- Fine-tuning detection models and thresholds

- Expanding infrastructure to handle growing monitoring needs

- Training your team to use advanced features effectively

Elastic provides tutorials and datasets for advanced use cases [3], offering a great way to gain hands-on experience with anomaly detection. By combining the ELK Stack's core tools with additional resources, you can create a more responsive and well-rounded monitoring system.

FAQs

How do I enable machine learning in Kibana?

To get started with machine learning in Kibana, here’s what you need to do:

- Set Up Security Privileges: Make sure Elasticsearch security privileges are configured for your users.

- Assign ML Roles: Decide on the level of access needed. Use the `machine_learning_admin` role for full control or the `machine_learning_user` role for viewing jobs and results [1][2].
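If you manage users through the Elasticsearch security API rather than the Kibana UI, role assignment might look like the Python sketch below, which builds a request body for the create or update users API (`POST /_security/user/<username>`). The helper name and the placeholder password are hypothetical, and `extra_roles` is included on the assumption that users typically also need a role granting Kibana access, which this sketch does not define.

```python
VIEW_ROLE = "machine_learning_user"
ADMIN_ROLE = "machine_learning_admin"


def build_user_body(password, full_control=False, extra_roles=()):
    """Return a user body that includes one of the two built-in ML roles."""
    ml_role = ADMIN_ROLE if full_control else VIEW_ROLE
    return {
        "password": password,
        # The ML role comes first, followed by any additional roles you pass in.
        "roles": [ml_role, *extra_roles],
    }


analyst = build_user_body("change-me-please")
ml_admin = build_user_body("change-me-please", full_control=True)
print(analyst["roles"], ml_admin["roles"])
```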

Key Points to Remember:

- Verify that your Elastic license includes machine learning. Check access on the Machine Learning page in Kibana [2].

- Manage machine learning tasks through the Job Management pane [3].

Proper role assignment is crucial for security and smooth operations. Once enabled, you can create detection jobs and dive into anomaly analysis.
