Real-time data stream processing analyzes data instantly as it arrives, without storing it first. This guide covers:

- What stream processing is and why it matters

- How to build scalable systems

- Key tools and technologies

- Best practices for performance and monitoring

Quick comparison of popular stream processing tools:

| Tool | Best For | Processing Model | Latency |
|---|---|---|---|
| Kafka | Data ingestion | Record-at-a-time | Very low |
| Flink | Complex events | Event-driven | Lowest |
| Spark | Large-scale analytics | Micro-batch | Low |
| Storm | Real-time computation | Record/micro-batch | Very low |

Key takeaways:

- Use distributed systems to handle high data volumes

- Process data in parallel for speed

- Design for fault-tolerance and even workload distribution

- Monitor performance metrics closely

- Test scalability regularly and update systems frequently

Companies like Netflix and UPS use stream processing to analyze millions of events per second, enabling real-time recommendations and efficient operations.


Basics of Real-Time Data Stream Processing

Real-time data stream processing is about handling data as it flows in. No waiting to store it first. Let's break it down:

Key Concepts

- Streams: Non-stop data flows

- Events: Single data points in a stream

- Processing nodes: Components that analyze or transform data

What Makes Real-Time Data Streams Special?

- Speed: Data moves FAST. We're talking millisecond-level processing.

- No breaks: Unlike batch processing, this data never stops coming.

Batch vs. Stream: The Showdown

| Feature | Batch | Stream |
|---|---|---|
| Data handling | Big chunks, set times | As it comes in |
| Speed | Slower (minutes to hours) | Faster (milliseconds to seconds) |
| Best for | Big reports, data overhauls | Instant insights, catching fraud |
| Setup | Easier | Trickier (it's real-time, after all) |

Stream processing is your go-to for quick insights. Take Netflix. They use it to analyze what you're watching and suggest shows on the spot. It's so good that 75% of what people watch comes from these real-time picks.

Batch processing? It's great for stuff that can wait, like nightly sales reports.

Want a real-world example? Look at UPS. Their package tracking crunches millions of updates every second. This real-time magic has cut fuel use by 10% and sped up deliveries.

"Stream processing lets companies act on data as it happens. It's a game-changer in fast-paced industries."

That's why more businesses are jumping on the stream processing train for their critical ops. It's all about staying ahead of the curve.

Common Scalability Issues in Stream Processing

Stream processing systems face big challenges when handling tons of real-time data. Here's what you need to know:

Dealing with Large Data Amounts

As data piles up, systems can struggle. This often means:

- Slower processing

- More resource use

- Higher costs

BMO Canada tackled this by finding bottlenecks. They looked at CPU and memory stats and fixed slow data transformation. This one change boosted their whole system.

Processing Fast-Moving Data

Speed is crucial. Systems must handle data as it comes in - sometimes millions of events per second.

UPS tracks packages in real-time, processing millions of updates every second. This cut their fuel use by 10% and sped up deliveries.

To manage high-speed data:

- Process in parallel

- Spread work across multiple nodes

- Use in-memory processing (like Apache Spark)
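Here's a minimal Python sketch of "split the work, then process in parallel." It's an illustration under stated assumptions, not how Spark does it: each event is assumed to be a dict with hypothetical `key` and `value` fields, and threads in one process stand in for separate nodes (for CPU-heavy work in CPython you'd reach for processes or real machines).

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def partition_events(events, num_partitions):
    """Group events by key so each worker owns a disjoint slice of the data."""
    partitions = defaultdict(list)
    for event in events:
        # Python's str hash() is salted per process but stable within one run,
        # which is all this in-process sketch needs.
        partitions[hash(event["key"]) % num_partitions].append(event)
    return partitions

def process_partition(events):
    """Sum values per key inside one partition (a stand-in for real work)."""
    totals = defaultdict(int)
    for event in events:
        totals[event["key"]] += event["value"]
    return dict(totals)

def process_in_parallel(events, num_workers=4):
    partitions = partition_events(events, num_workers)
    merged = {}
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        for result in pool.map(process_partition, partitions.values()):
            # A key never spans two partitions, so results merge without conflicts.
            merged.update(result)
    return merged
```

Partitioning by key is the design choice that matters here: because all events for a key land on one worker, no cross-worker coordination is needed to produce correct per-key totals.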

Handling Different Data Types

Stream processing often deals with various data sources and formats. This can slow things down and cause errors.

| Data Type | Challenge | Solution |
|---|---|---|
| Structured | Needs consistent schema | Use schema registry |
| Unstructured | Hard to parse | Flexible parsing |
| Semi-structured | Inconsistent format | Adaptive processing |
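To make the table concrete, here's a sketch of a single normalization step that accepts all three shapes. The `SCHEMA_REGISTRY` dict is a hypothetical in-memory stand-in for a real schema registry service, and the `LEVEL message` log-line format is an assumption for illustration.

```python
import json
import re

# Hypothetical in-memory stand-in for a schema registry: schema id -> required fields and types.
SCHEMA_REGISTRY = {"order-v1": {"order_id": str, "amount": float}}

# Assumed log format for unstructured input, e.g. "ERROR disk full".
LOG_LINE = re.compile(r"(?P<level>[A-Z]+) (?P<message>.+)")

def normalize(raw):
    """Map structured, semi-structured, or unstructured input to a plain dict."""
    if isinstance(raw, dict) and "schema" in raw:
        # Structured: enforce the registered schema so bad records fail fast.
        schema = SCHEMA_REGISTRY[raw["schema"]]
        for field, field_type in schema.items():
            if not isinstance(raw.get(field), field_type):
                raise ValueError(f"{field!r} is missing or not a {field_type.__name__}")
        return {"kind": "structured", **{field: raw[field] for field in schema}}
    if not isinstance(raw, str):
        return {"kind": "unparsed", "raw": raw}
    if raw.lstrip().startswith("{"):
        # Semi-structured: JSON whose fields vary, so keep whatever is present.
        return {"kind": "semi-structured", **json.loads(raw)}
    # Unstructured: flexible regex parse, with a fallback instead of an exception.
    match = LOG_LINE.match(raw)
    if match:
        return {"kind": "unstructured", **match.groupdict()}
    return {"kind": "unparsed", "raw": raw}
```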

Adjusting to Changing Data Speeds

Data flow isn't always steady. Systems need to handle:

- Sudden data spikes

- Slow periods

- Varying arrival rates

To manage this:

1. Scale dynamically

Add or remove processing power automatically based on needs.

2. Use backpressure

Slow down data intake when it's coming in too fast.

3. Load shedding

In extreme cases, drop some low-priority data to keep things running.
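Points 2 and 3 can be sketched with a bounded buffer. This is a single-process illustration, not any framework's API: the `priority` field and the `"accepted"`/`"retry"`/`"shed"` return values are assumptions. When the buffer is full, high-priority producers are told to slow down and resend (backpressure), while low-priority events are dropped outright (load shedding).

```python
import queue

class BoundedIngest:
    """Bounded buffer that signals backpressure and sheds low-priority events when full."""

    def __init__(self, capacity):
        self.buffer = queue.Queue(maxsize=capacity)
        self.shed_count = 0

    def offer(self, event, timeout=0.01):
        try:
            # Wait briefly for space instead of letting the buffer grow without limit.
            self.buffer.put(event, timeout=timeout)
            return "accepted"
        except queue.Full:
            if event.get("priority") == "low":
                # Load shedding: drop low-priority data to keep the system running.
                self.shed_count += 1
                return "shed"
            # Backpressure: the caller must slow down and try again later.
            return "retry"

    def poll(self):
        """Consumer side: take the oldest buffered event, or None if empty."""
        try:
            return self.buffer.get_nowait()
        except queue.Empty:
            return None
```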

Building Scalable Stream Processing Systems

Want to handle tons of data in real-time? Here's how to build systems that can grow:

Using Distributed Systems

Distributed systems spread work across multiple computers. This helps:

- Handle more data

- Keep running if parts fail

Apache Spark is a popular choice. It's FAST: for in-memory workloads, it can process data up to 100 times quicker than Hadoop MapReduce.

Parallel Processing Approaches

Processing in parallel speeds things up. Two main ways:

- Split data into chunks

- Run different tasks at the same time

Apache Flink uses both. In tests, it handled 1,800 events per second with less than 10 ms latency. That's quick!
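The second approach, running different tasks at the same time, often looks like a pipeline: each stage runs concurrently and hands its output to the next stage through a queue. Here's a minimal Python sketch, assuming comma-separated `user,amount` input lines (a made-up format); it illustrates the idea and isn't Flink's runtime.

```python
import queue
import threading

_DONE = object()  # sentinel: tells the next stage that no more items are coming

def start_stage(fn, inbox, outbox):
    """Run one pipeline stage in its own thread: take an item, transform it, pass it on."""
    def run():
        while True:
            item = inbox.get()
            if item is _DONE:
                outbox.put(_DONE)  # propagate shutdown downstream
                return
            outbox.put(fn(item))
    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread

def run_pipeline(lines):
    raw, parsed, records = queue.Queue(), queue.Queue(), queue.Queue()
    threads = [
        start_stage(lambda line: line.strip().split(","), raw, parsed),                 # stage 1: parse
        start_stage(lambda f: {"user": f[0], "amount": float(f[1])}, parsed, records),  # stage 2: build record
    ]
    for line in lines:
        raw.put(line)  # stage 1 starts working while we are still feeding it
    raw.put(_DONE)
    results = []
    while (item := records.get()) is not _DONE:
        results.append(item)
    for thread in threads:
        thread.join()
    return results
```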

Keeping Systems Running When Things Go Wrong

Stuff breaks. Here's how to stay up:

- Save system state regularly

- Have backup nodes ready

- Use data sources that can replay info

Kafka's a good pick for that last point.
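Here's a minimal sketch of the first and last points working together: the job saves its position in the input (the offset) and its state in one checkpoint, and after a crash it replays the input from that offset. A Python list stands in for a replayable source such as a Kafka topic, and the JSON-file checkpoint is an assumption for illustration.

```python
import json
import os

class CheckpointedCounter:
    """Counts events per key and periodically saves (offset, state) together."""

    def __init__(self, path, every=2):
        self.path, self.every = path, every
        self.offset, self.counts = 0, {}
        if os.path.exists(path):
            # Recovery: resume from the last saved offset and state.
            with open(path) as f:
                saved = json.load(f)
            self.offset, self.counts = saved["offset"], saved["counts"]

    def checkpoint(self):
        # Write to a temp file, then rename, so a crash never leaves a half-written checkpoint.
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump({"offset": self.offset, "counts": self.counts}, f)
        os.replace(tmp, self.path)

    def run(self, log, crash_at=None):
        # Replay from the checkpointed offset; this only works because the source is replayable.
        for i in range(self.offset, len(log)):
            if i == crash_at:
                raise RuntimeError("simulated crash")
            key = log[i]
            self.counts[key] = self.counts.get(key, 0) + 1
            self.offset = i + 1
            if self.offset % self.every == 0:
                self.checkpoint()
        self.checkpoint()
```

Because the offset and the counts are saved in the same file, events processed after the last checkpoint are simply recounted on replay, and none are counted twice.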

Spreading the Workload Evenly

Uneven workloads? That's a problem. To fix it:

- Watch processing load

- Spot imbalances

- Redistribute work

A study on Apache Spark showed dynamic load balancing worked better as data got messier.

| Approach | What's Good |
|---|---|
| Static | Easy setup |
| Dynamic | Adjusts on the fly |
| Hybrid | Best of both worlds |
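To see why dynamic balancing helps with skewed data, compare a static round-robin assignment with a simple greedy "send it to the least-loaded worker" rule. This is a generic heuristic for illustration, not the STAL mode from the study; task costs stand in for processing time.

```python
import heapq
from itertools import cycle

def static_round_robin(task_costs, num_workers):
    """Assign tasks in a fixed rotation, ignoring how busy each worker already is."""
    loads = [0] * num_workers
    for worker, cost in zip(cycle(range(num_workers)), task_costs):
        loads[worker] += cost
    return loads

def dynamic_least_loaded(task_costs, num_workers):
    """Send each task to whichever worker currently has the smallest total load."""
    heap = [(0, worker) for worker in range(num_workers)]
    for cost in task_costs:
        load, worker = heapq.heappop(heap)
        heapq.heappush(heap, (load + cost, worker))
    loads = [0] * num_workers
    for load, worker in heap:
        loads[worker] = load
    return loads
```

With skewed costs `[10, 1, 10, 1, 10, 1, 10, 1]` on two workers, round-robin piles every heavy task onto one worker (`[40, 4]`), while the least-loaded rule ends at `[22, 22]`.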

"Dynamic LB STAL mode crushed it on throughput and latency compared to static modes, especially with skewed data." - Apache Spark study

Bottom line: Build smart, spread the work, and plan for hiccups. Your stream processing system will thank you.

Tools for Scalable Stream Processing

Let's dive into some key tools for handling big data streams:

Apache Kafka

Kafka is the go-to for managing data at scale. It's open-source, distributed, and fault-tolerant. Plus, it plays nice with other tools like Flink and Storm.

Apache Flink

Flink's your guy for complex event processing. It handles out-of-order events and manages large stateful computations. And it's FAST: we're talking millions of events per second at millisecond-level latency.

Apache Spark Streaming

Part of the Spark ecosystem, this tool is known for in-memory computing and handling both batch and stream processing. Bonus: it supports multiple programming languages.

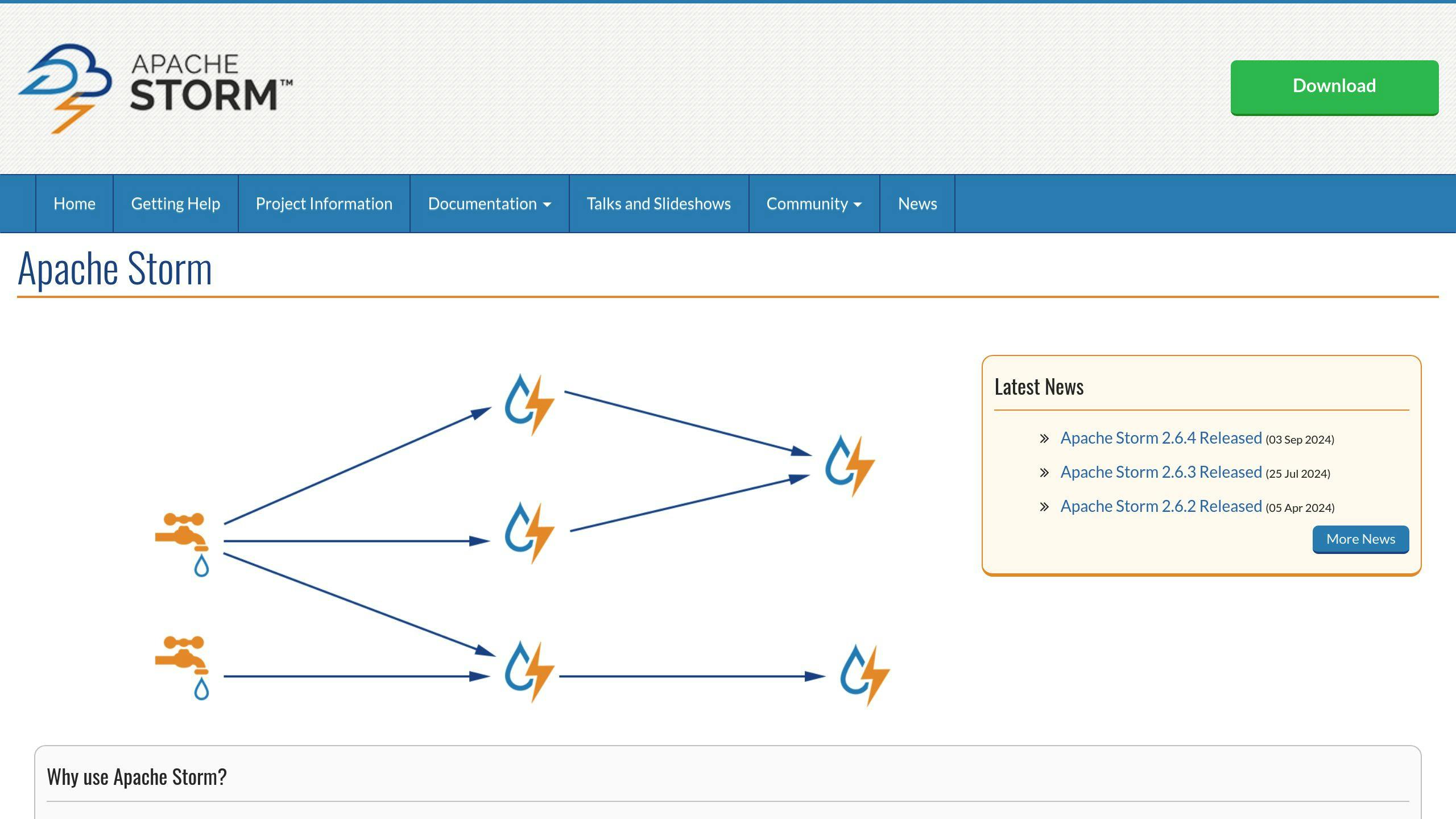

Apache Storm

Storm's all about real-time data processing. It's built for low latency and distributed real-time calculations, and its Trident API adds micro-batch processing when you need it.

Tool Comparison

Here's a quick look at how these tools stack up:

| Tool | Best For | Processing Model | Latency |
|---|---|---|---|
| Kafka | Data ingestion, message bus | Record-at-a-time | Very low |
| Flink | Complex event processing | Event-driven | Lowest |
| Spark | Large-scale data analytics | Micro-batch | Low |
| Storm | Real-time computation | Record or micro-batch | Very low |

Your choice? It depends on what you need.

"Kafka Streams is one of the leading real-time data streaming platforms and is a great tool to use either as a big data message bus or to handle peak data ingestion loads." - Tal Doron, Director of Technology Innovation at GigaSpaces

Getting and Storing Data in Scalable Systems

Handling High-Volume Data Streams

When you're dealing with a ton of incoming data, you need smart ways to manage it. Here's how:

- Split data across multiple processors

- Balance the load evenly

- Group messages into micro-batches

Think about stock trading systems. They need to crunch MASSIVE amounts of data FAST. So, they use micro-batching to analyze price changes in split-second windows. This lets them make trades at lightning speed.

Storing Data Across Multiple Computers

Big data needs big storage. Here are some options:

- Apache Kafka and Pulsar: Great for scalable, fault-tolerant storage

- Cloud solutions: Think Amazon S3 or Google BigQuery

- Time-series databases: Perfect for time-stamped data

Quick Access with Computer Memory

Want to speed things up? Use in-memory storage:

- Keep data in RAM before processing

- Cache frequently accessed data

- Process data directly in RAM

Apache Spark does this. Result? For in-memory workloads, it can be up to 100 times faster than Hadoop MapReduce. That's FAST.

Splitting Up Data

Partitioning data is key for parallel processing. Here's a quick breakdown:

| Method | What it does | When to use it |
|---|---|---|
| Hash-based | Spreads data evenly | For general even distribution |
| Range-based | Splits into ranges | Great for time-series data |
| List-based | Uses predefined lists | Perfect for geographical data |
| Composite | Combines methods | For complex data structures |

Pick the method that fits your data and how you'll use it.
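Here's how the four methods might look as plain Python functions. MD5 is just a stable stand-in hash (Kafka's default partitioner, for example, uses murmur2 on the record key), and the region map and time boundaries are made-up values for illustration.

```python
import bisect
import hashlib

def hash_partition(key, num_partitions):
    # Stable hash (unlike Python's per-process salted hash()), so a key always lands in the same partition.
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions

def range_partition(timestamp, boundaries):
    # boundaries are sorted cut-offs: partition i holds timestamps below boundaries[i]
    # (and at or above boundaries[i - 1]); the last partition takes everything after.
    return bisect.bisect_right(boundaries, timestamp)

# Hypothetical list mapping: countries grouped into regional partitions.
REGION_PARTITIONS = {"US": 0, "CA": 0, "DE": 1, "FR": 1, "JP": 2}

def list_partition(country, default=3):
    return REGION_PARTITIONS.get(country, default)

def composite_partition(country, key, per_region=4):
    # Pick the region first (list-based), then spread keys within it (hash-based).
    return list_partition(country) * per_region + hash_partition(key, per_region)
```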

"Get your Kafka topics and OpenSearch Compute Units in sync, and you'll see your data processing efficiency skyrocket." - AWS Documentation

Processing Methods for Better Scalability

Stream processing systems use different methods to handle big data fast. Here's how:

Processing Data in Time Windows

Time windows break continuous data streams into chunks. It's like slicing a long ribbon into manageable pieces.

Four main window types:

| Window Type | What It Does | When to Use It |
|---|---|---|
| Tumbling | Fixed-size, no overlap | Counting website visits per minute |
| Hopping | Fixed-size, overlapping | Spotting weird patterns as they happen |
| Sliding | Moves with new events | Keeping trend analysis fresh |
| Session | Groups by activity | Tracking how people shop online |
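Here's a minimal sketch of three of these window types over plain integer timestamps (say, seconds). It's an illustration, not any engine's windowing API; sliding windows are left out for brevity.

```python
from collections import defaultdict

def tumbling(timestamps, size):
    """Count events in fixed, non-overlapping windows [0, size), [size, 2*size), ..."""
    counts = defaultdict(int)
    for ts in timestamps:
        counts[ts - ts % size] += 1
    return dict(counts)

def hopping(timestamps, size, hop):
    """Count events in fixed-size windows that start every `hop` units, so they overlap."""
    counts = defaultdict(int)
    for ts in timestamps:
        # Walk back over every window start s (a multiple of hop) with s <= ts < s + size.
        start = ts - ts % hop
        while start > ts - size:
            if start >= 0:
                counts[start] += 1
            start -= hop
    return dict(counts)

def sessions(timestamps, gap):
    """Group timestamps into sessions that close after more than `gap` units of inactivity."""
    result = []
    for ts in sorted(timestamps):
        if result and ts - result[-1][-1] <= gap:
            result[-1].append(ts)
        else:
            result.append([ts])
    return result
```

For events at `[1, 3, 7, 12]`, 5-unit tumbling windows give `{0: 2, 5: 1, 10: 1}`, while 10-unit windows hopping every 5 give `{0: 3, 5: 2, 10: 1}`: the event at 7 is counted in two overlapping windows.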

To Remember or Not to Remember

Your system can be:

- Stateless: Processes each data bit alone. Easy to scale, but limited.

- Stateful: Remembers past data. Good for complex tasks, trickier to scale.
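The difference shows up clearly in code. In this sketch (hypothetical `sensor` and `temp_f` fields), the stateless function can run on any worker, while the stateful one only gives correct answers if every event for a sensor reaches the same instance, or if its state is shared or checkpointed.

```python
def to_celsius(event):
    """Stateless: the output depends only on this one event."""
    return {**event, "temp_c": round((event["temp_f"] - 32) * 5 / 9, 1)}

class RunningAverage:
    """Stateful: remembers per-sensor totals across events."""

    def __init__(self):
        self.totals = {}  # sensor -> (sum of readings, number of readings)

    def update(self, event):
        total, count = self.totals.get(event["sensor"], (0.0, 0))
        total, count = total + event["temp_f"], count + 1
        self.totals[event["sensor"]] = (total, count)
        return total / count
```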

Processing in Small Batches

Micro-batch processing is the middle child between batch and stream processing. It handles data in small groups, often every few seconds.

"Spark Streaming's sweet spot starts at 50 milliseconds batches. True stream processing? We're talking single-digit milliseconds." - Apache Spark docs

Use this when you need quick results but don't need instant processing for every single event.
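The core idea fits in a few lines: collect events and flush them as one batch when the batch is either full or old enough. This is a sketch, not Spark's scheduler; the size and age limits are assumed parameters, and the age check only runs when a new event arrives (a real implementation would also flush on a timer).

```python
import time

class MicroBatcher:
    """Collect events and emit them as a batch once it is full or has waited long enough."""

    def __init__(self, max_size, max_wait_s, clock=time.monotonic):
        self.max_size, self.max_wait_s, self.clock = max_size, max_wait_s, clock
        self.batch, self.opened_at = [], None

    def add(self, event):
        """Add an event; return the finished batch if a flush was triggered, else None."""
        if not self.batch:
            self.opened_at = self.clock()  # the batch's age starts with its first event
        self.batch.append(event)
        full = len(self.batch) >= self.max_size
        too_old = self.clock() - self.opened_at >= self.max_wait_s
        return self.flush() if full or too_old else None

    def flush(self):
        batch, self.batch = self.batch, []
        return batch
```

Injecting `clock` keeps the class testable: a fake clock lets you trigger the age-based flush without actually waiting.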

Event Time vs. Processing Time

There's often a gap between when something happens and when we process it. This matters, especially for time-sensitive stuff.

You can use:

- Event time: When it actually happened

- Processing time: When your system deals with it

Pick based on your needs. For fraud detection, event time is crucial.
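Here's a sketch of what event-time processing involves. Events are bucketed by when they happened, and a simple watermark (the latest event time seen, minus an allowed lateness) decides when a window is complete. Window size and lateness are assumed values, and real engines such as Flink track watermarks in more sophisticated ways.

```python
from collections import defaultdict

class EventTimeCounter:
    """Counts events per tumbling window by event time, tolerating some late arrivals."""

    def __init__(self, window, allowed_lateness):
        self.window, self.allowed_lateness = window, allowed_lateness
        self.max_seen = float("-inf")
        self.open = defaultdict(int)  # window start -> count, still accepting events
        self.closed = {}              # window start -> final count
        self.dropped = 0

    def watermark(self):
        # Assumption: no event older than this will still arrive.
        return self.max_seen - self.allowed_lateness

    def on_event(self, event_ts):
        start = event_ts - event_ts % self.window
        if start + self.window <= self.watermark():
            self.dropped += 1  # too late: its window has already been closed
            return
        self.open[start] += 1
        self.max_seen = max(self.max_seen, event_ts)
        # Close every window that ends at or before the new watermark.
        for s in [s for s in self.open if s + self.window <= self.watermark()]:
            self.closed[s] = self.open.pop(s)
```

Feeding events in arrival order `[1, 12, 8, 25, 3]` with 10-unit windows and 5 units of lateness, the out-of-order event at 8 still lands in window 0, while the event at 3 arrives after that window closed and is dropped.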

"A big credit card company processes core data for fraud detection in 7 milliseconds. That's stream processing for you." - Industry Report on Real-Time Processing


Ways to Make Systems Bigger

As data streams grow, you need to beef up your processing systems. Here's how:

Adding More Computers

Horizontal scaling is the go-to for big data streams. It's simple: add more machines.

Take Apache Kafka. It can handle millions of messages per second across multiple computers. That's why it's a hit with over 60% of Fortune 500 companies.

A major e-commerce company used Kafka to handle order floods during sales peaks. They just added servers as needed, keeping things smooth even when swamped.

Making Existing Computers Stronger

Sometimes, you need more juice in each machine. That's vertical scaling.

MongoDB Atlas is great for this. You can add CPU or RAM to your database servers without downtime. Perfect for those sudden data processing spikes.

Systems That Grow and Shrink Automatically

Auto-scaling is a game-changer. Your system adapts to changing workloads on its own.

Ververica Cloud, built on Apache Flink, does this well. A shipping company used it for real-time delivery updates. As they grew, Ververica Cloud automatically added resources to keep things zippy.
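The decision at the heart of auto-scaling can be as simple as a proportional rule. This sketch is similar to the formula Kubernetes' Horizontal Pod Autoscaler documents (desired = ceil(current × observed / target)); it isn't Ververica's mechanism, and the target utilization, tolerance, and worker limits are assumed values.

```python
import math

def desired_workers(current_workers, observed_util, target_util=0.7,
                    min_workers=1, max_workers=20, tolerance=0.1):
    """Resize so that average utilization lands near the target."""
    ratio = observed_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current_workers  # close enough: avoid flapping on small fluctuations
    wanted = math.ceil(current_workers * ratio)
    return max(min_workers, min(max_workers, wanted))
```

For example, 4 workers running at 95% utilization against a 70% target scale out to 6, while 10 workers idling at 20% scale in to 3.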

Flexible Systems That Change with Demand

The best systems scale both ways - up when busy, down when quiet.

PASCAL, a new auto-scaling system, does this smartly. It uses machine learning to predict workloads and adjust resources. In tests with Apache Storm, it cut costs without sacrificing performance.

Here's a quick comparison:

| Method | Pros | Cons | Best For |
|---|---|---|---|
| Horizontal | Nearly unlimited growth | Can be complex | Large, distributed systems |
| Vertical | Simple, quick boost | Limited by hardware | Smaller, specific upgrades |
| Auto-scaling | Adapts to demand | Needs careful setup | Variable workloads |
| Flexible | Cost-effective | Requires advanced tech | Unpredictable data streams |

Making Systems Work Better

Want to supercharge your stream processing system? Focus on these four areas:

Pack and Compress Data

Squeezing data is key. It speeds up transfers and saves space. Check this out:

Netflix shrunk their data by up to 1,000 times. Result? Way faster stats crunching.

For streaming data, try these compression tricks:

| Algorithm | Speed | Space Saving | Best Use |
|---|---|---|---|
| Snappy | Fast | Low | Quick processing |
| gzip | Slow | High | Storage |
| LZ4 | Very fast | Medium | All-rounder |
| Zstandard | Flexible | High | Customizable |
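Compression pays off most when you squeeze a whole batch at once, because repeated field names and values compress well together. This sketch uses Python's built-in `gzip` as a stand-in (Snappy, LZ4, and Zstandard need third-party packages) and assumes records are JSON-serializable dicts.

```python
import gzip
import json

def compress_batch(records, level=6):
    """Serialize a batch as JSON lines and gzip it as one unit."""
    payload = "\n".join(json.dumps(r, separators=(",", ":")) for r in records).encode("utf-8")
    return payload, gzip.compress(payload, compresslevel=level)

def decompress_batch(blob):
    """Reverse compress_batch: gunzip, then parse one JSON record per line."""
    return [json.loads(line) for line in gzip.decompress(blob).decode("utf-8").splitlines()]
```

Lower `compresslevel` values trade ratio for speed, which is the same speed-versus-space trade-off the table shows.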

Cache Hot Data

Storing frequently used data nearby? Game-changer. Take Kafka:

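It relies on the operating system's page cache, so recent messages are usually served straight from memory. Its producers also batch messages bound for the same partition, which cranks up throughput.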

To nail caching:

- Keep hot data in memory

- Set up tiered caching

- Keep cached data fresh
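The first and third points can be combined in one small structure: an LRU cache that keeps hot entries in memory and expires them after a time-to-live so cached data stays fresh. This is a sketch with assumed capacity and TTL values (tiered caching isn't shown), and `loader` is a hypothetical function that fetches from the slower backing store.

```python
import time
from collections import OrderedDict

class TTLCache:
    """LRU cache whose entries also expire after `ttl_s` seconds."""

    def __init__(self, capacity, ttl_s, clock=time.monotonic):
        self.capacity, self.ttl_s, self.clock = capacity, ttl_s, clock
        self.entries = OrderedDict()  # key -> (value, stored_at), oldest use first

    def get(self, key, loader):
        """Return the cached value, or call `loader(key)` on a miss or an expired entry."""
        hit = self.entries.get(key)
        if hit is not None and self.clock() - hit[1] < self.ttl_s:
            self.entries.move_to_end(key)  # mark as most recently used
            return hit[0]
        value = loader(key)
        self.entries[key] = (value, self.clock())
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return value
```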

Speed Up Queries

Fast queries = happy system. Here's how:

1. Smart indexing

Create indexes for your go-to fields.

2. Partition data

Split it up for parallel processing.

3. Tune query plans

Analyze and tweak how your system runs queries.
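Steps 1 and 3 are easy to try with Python's built-in SQLite as a stand-in store (your streaming system's storage layer will have its own tools): inspect the query plan, add an index on the field you filter by, and check the plan again. The `events` table and its columns are made up for illustration.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return SQLite's plan description (the last column of each EXPLAIN QUERY PLAN row)."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO events (user_id, amount) VALUES (?, ?)",
    [(f"user-{i % 500}", i * 0.5) for i in range(10_000)],
)

lookup = "SELECT * FROM events WHERE user_id = ?"
plan_before = query_plan(conn, lookup, ("user-7",))  # no index yet: full table scan

# Smart indexing: index the field that most queries filter on.
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
plan_after = query_plan(conn, lookup, ("user-7",))   # now a search using idx_events_user
```

Before the index, the plan reports a scan of `events`; afterwards it reports a search using `idx_events_user`.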

Manage Resources Smartly

Resource management is crucial. Kafka's a pro at this:

It can handle millions of messages per second when set up right.

To optimize:

- Keep an eye on performance

- Adjust resources as needed

- Use auto-scaling for traffic spikes

Remember: These tweaks work together. Implement them all for best results.

Watching and Managing Large Stream Processing Systems

As stream processing systems grow, monitoring becomes key. Here's how to keep tabs on these systems effectively:

Key Metrics to Watch

Focus on these metrics:

- Throughput: Messages processed per second

- Latency: Time from ingestion to processing

- Consumer lag: Gap between latest and last processed message

- Resource usage: CPU, memory, and network use
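Here's a sketch of how the first three metrics can be computed from raw observations. The input shapes are assumptions: `(ingest_ts, processed_ts)` pairs for recently processed events, plus per-partition offsets. Consumer lag follows the Kafka convention of latest (log-end) offset minus the consumer's committed offset.

```python
import math

def stream_metrics(events, window_s, end_offsets, committed_offsets):
    """Throughput, p99 latency, and consumer lag for one observation window.

    events: (ingest_ts, processed_ts) pairs seen during the last `window_s` seconds.
    end_offsets / committed_offsets: partition -> latest offset / consumer's committed offset.
    """
    throughput = len(events) / window_s
    latencies = sorted(done - ingested for ingested, done in events)
    # Nearest-rank p99: smallest latency with at least 99% of samples at or below it.
    rank = math.ceil(len(latencies) * 99 / 100)
    p99 = latencies[rank - 1] if latencies else 0.0
    lag = {p: end_offsets[p] - committed_offsets.get(p, 0) for p in end_offsets}
    return {
        "throughput_per_s": throughput,
        "p99_latency_s": p99,
        "lag": lag,
        "total_lag": sum(lag.values()),
    }
```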

Effective Monitoring Tools and Practices

1. Use specialized tools

Datadog's Data Streams Monitoring (DSM) offers:

- Automatic mapping of service and queue dependencies

- End-to-end latency measurements

- Lag metrics in seconds and offset

2. Set up alerts

Monitor for:

- Unusual latency

- Abnormal throughput

- Message backups

3. Implement continuous validation

Constantly check data movement from source to target.
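The alert conditions from step 2 can start as simple threshold rules over a metrics snapshot. All thresholds here are hypothetical defaults, and the metric names match a dict shaped like `{"p99_latency_s": ..., "throughput_per_s": ..., "total_lag": ...}`; in practice a tool like DSM would evaluate rules like these for you.

```python
def check_alerts(metrics, baseline, latency_slo_s=0.5, lag_limit=10_000,
                 drop_ratio=0.5, spike_ratio=3.0):
    """Return human-readable alerts for unusual latency, abnormal throughput, and backups."""
    alerts = []
    if metrics["p99_latency_s"] > latency_slo_s:
        alerts.append(f"latency: p99 {metrics['p99_latency_s']:.3f}s exceeds {latency_slo_s}s")
    usual = baseline["throughput_per_s"]
    if metrics["throughput_per_s"] < drop_ratio * usual:
        alerts.append(f"throughput: below {drop_ratio:.0%} of the usual rate")
    elif metrics["throughput_per_s"] > spike_ratio * usual:
        alerts.append(f"throughput: above {spike_ratio:.0f}x the usual rate")
    if metrics["total_lag"] > lag_limit:
        alerts.append(f"backlog: {metrics['total_lag']} messages waiting")
    return alerts
```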

Spotting and Solving Issues

When problems pop up:

- Find the source using tools like DSM

- Check message backup volumes to prioritize fixes

- Set up auto-relaunch for stuck stateless jobs

"Data Streams Monitoring helps us find performance bottlenecks and optimize stream processing for max throughput and low latency." - Darren Furr, Solutions Architect at MarketAxess

Planning for Future Needs

Stay ahead of growth:

- Study past consumer lag data with tools like CrowdStrike's Kafka monitor

- Set up auto-scaling based on consumer lag

- Define clear SLAs for your streaming data infrastructure

Good Practices for Building Big Stream Processing Systems

Design Rules for Growth

When building stream processing systems, think big from day one. Break your pipeline into bite-sized chunks. Why? It's way easier to scale specific parts as you grow.

Take Apache Kafka. It splits each topic into partitions, which lets it process data in parallel and balance loads better. The result? LinkedIn used Kafka to handle a mind-boggling 7 trillion messages daily in 2019.

Testing How Well Systems Grow

Want to know if your system can handle the heat? Test it. Regularly. Use tools that can throw millions of events at your system per second.

Apache Samza's got your back here. Its testing framework can simulate real-world conditions. It's like a stress test for your system, but with data instead of treadmills.

Updating Stream Processing Systems Regularly

Keep your system fresh. Use CI/CD practices for smooth updates. It's like giving your car regular oil changes - keeps everything running smoothly.

Uber's AthenaX platform is a pro at this. They push updates multiple times a day without breaking their 24/7 operations. It's like changing a tire while the car's still moving.

Keeping Big Systems Safe

Big systems need big security. Encrypt your data. All of it. In transit and at rest.

Netflix gets this. They process billions of events daily and use TLS encryption for all data in transit. They're also big on access controls and regular audits. It's like having a bouncer and a security camera for your data.

| Best Practice | Example | Benefit |
|---|---|---|
| Modular Design | Kafka's partitioning | Scale specific parts easily |
| Regular Testing | Samza's testing framework | Catch issues early |
| Continuous Updates | Uber's AthenaX deployment | Smooth, frequent updates |
| Strong Security | Netflix's encryption and audits | Keep data safe |

Real Examples

How Companies Built Big Stream Processing Systems

Netflix changed their streaming service using big data tech. They built a system with Apache Kafka, Apache Flink, and AWS to handle 200 million+ subscribers in over 190 countries.

Their recommendation system uses machine learning to analyze viewing habits and streaming data. This personalization keeps subscribers around longer.

"Netflix is a prime example of a company that used big data to transform its business." - VivekR, Medium author

Ciena, a telecom equipment supplier, upgraded their analytics using Striim. They use Snowflake for data storage and Striim to copy data changes, processing about 100 million events daily. This upgrade sped up accounting and manufacturing.

| Company | Tech Used | Data Processed | Result |
|---|---|---|---|
| Netflix | Kafka, Flink, AWS | 200M+ subscribers | Better recommendations |
| Ciena | Striim, Snowflake | 100M events/day | Faster business processes |

What We Learned

1. Scale matters

Netflix shows why building systems that grow with users is crucial. Their setup handles millions of streams easily.

2. Real-time is key

Ciena processes 100 million events daily. This real-time data helps them make quick decisions and work more efficiently.

3. Personalization works

Netflix's recommendations prove that analyzing real-time data can improve user experiences and business results.

4. Integration is powerful

Ciena uses Striim to connect data sources to Snowflake. This shows how combining tools can create a better data system.

5. Data drives decisions

Both examples show how using big data can guide strategy and boost business growth.

What's Next for Big Stream Processing

The future of big stream processing is looking bright. Here's what's coming:

New Tools and Methods

- Serverless Stream Processing

No more coding headaches. Fully managed solutions like Confluent Cloud's serverless Flink Actions are making stream processing a breeze.

- AI Automation

AI is shaking things up. It's now possible to analyze datasets in real time, catching patterns and issues on the fly.

- Edge Computing Integration

Processing data closer to its source? It's happening. This cuts delays and saves bandwidth, which is huge for IoT devices.

| Tech | What It Does |
|---|---|
| Serverless | Easy setup, no coding |
| AI Automation | Real-time analysis |
| Edge Computing | Less delay, saves bandwidth |

How It's Changing

The stream processing world is evolving fast:

- Big Growth

By 2025, the streaming analytics market could hit $39 billion. That's a compound annual growth rate of 29% from 2018 to 2025.

- New Databases

Databases built for real-time processing are popping up. They'll make handling live data streams a lot easier for businesses.

Together, these trends are set to supercharge stream processing. Expect faster data crunching and snappier apps.

In healthcare, for example, this could power wearables and sensors that deliver quicker, cheaper patient care.

- AI and ML Taking Center Stage

AI and machine learning are becoming key players. In fact, 75% of businesses see them as the main reason to adopt streaming data in the next two years.

"Companies want real-time data for their apps, analytics, and AI/ML models. This means switching from old-school batch processing to streaming systems that handle tons of data per second." - Redpanda Report Authors

As these changes roll out, businesses will need to step up their game to make the most of real-time data processing.

Wrap-Up

We've covered a lot about real-time data stream processing. Let's boil it down to the essentials:

Real-time processing analyzes data on the fly. It's fast, scalable, and keeps running even when things go wrong. You need four main parts to make it work:

| Component | What it does |
|---|---|
| Processing Engine | Handles the data stream |
| Storage | Keeps the data safe |
| Messaging Systems | Moves the data around |
| Visualization Tools | Shows what the data means |

Why does scalability matter? Simple:

1. It handles more data

As your data grows, your system grows with it. No need for a complete overhaul.

2. It keeps things fast

More data doesn't mean slower processing. Scalable systems keep up the pace.

3. It saves money

You use only what you need, when you need it. That's good for your wallet.

4. It's ready for the future

Whatever comes next, your scalable system can handle it.

Real companies are already using this stuff:

"John Deere streams data from tens of thousands of farming vehicles in real-time to optimize food production."

That's a LOT of tractors sending data all at once.

"Hearst built a clickstream analytics solution to transmit and process 30 terabytes of data a day from 300+ websites worldwide."

Imagine trying to handle all those clicks without a scalable system!